Results

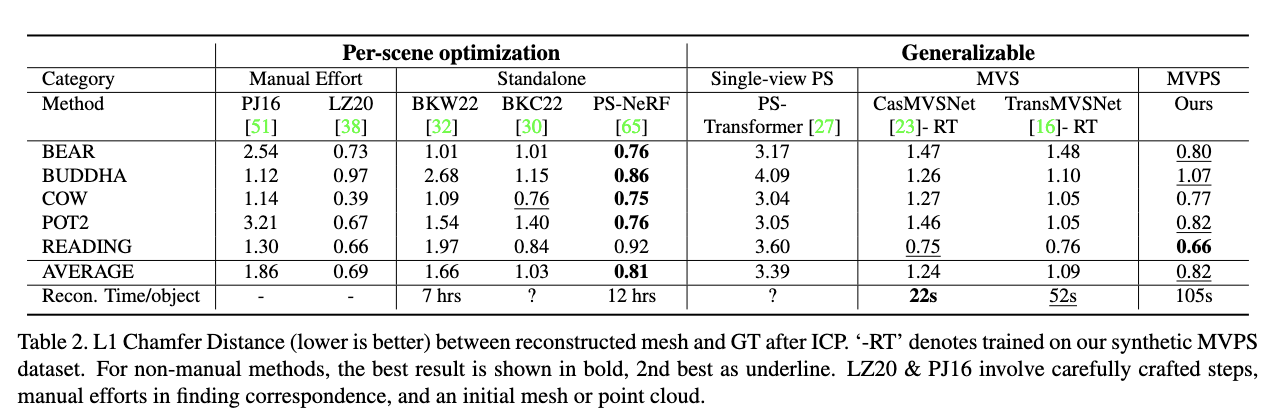

Results on DiLiGenT-MV

MVPSNet achieves results comparable to the state-of-the-art (SOTA) method while being fast and generalizable.

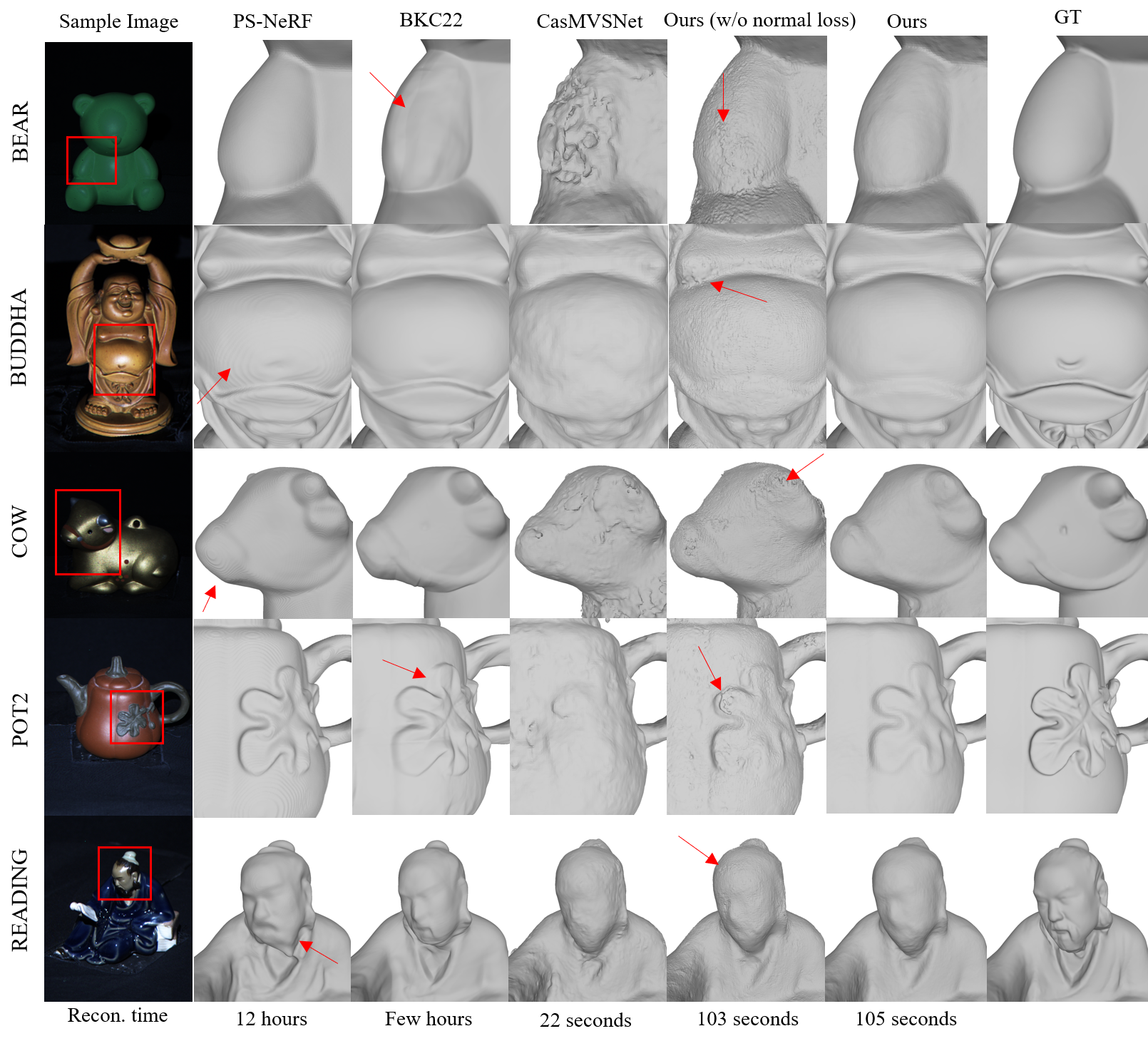

Result on a real-world capture

An example of a real-world capture using a simple at-home setup: the user captures MVPS imagery by tying a flashlight to the tripod with a string and moving the light in a circle around the camera.